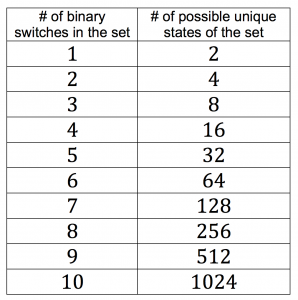

The notion of “data types” is probably the most underrated concept outside of computer science that I can think of right now. Briefly, computers use “typed variables” to represent numbers internally. All numbers are internally represented as a collection of “binary digits” or “bits” (a term coined by the underrated genius John Tukey, who also gave us the HSD test and the fast Fourier transform, among other useful things), more commonly known to the general public as “zeroes and ones”. An electric switch can either be on (1) or off (0) – usually implemented by voltages that are either high or low. So as a computer ultimately represents all numbers as a collection of zeroes and ones, the question is how many of them are used.

A set of 8 bits makes up a “byte”, usually the smallest addressable unit of memory. So with one byte of memory, we can represent 2^8 or 256 distinct states of switch positions, i.e. from 00000000 (all off) to 11111111 (all on), and everything in between. And that is what data types build on. For instance, an 8-bit unsigned “integer” takes up one byte in memory, so we can represent 256 distinct states (usually the numbers from 0 to 255) with it. Other data types such as “single precision” take up 32 bits (= 4 bytes) and can represent over 4 billion states (2^32), whereas “double precision” numbers take up 64 bits (= 8 bytes of memory) and can represent even more states (2^64). In contrast, the smallest possible data type is a Boolean, which can technically be represented by a single bit and can only take two states (0 and 1). It is often used when checking conditionals (“if these conditions are true (= 1), do this. If they are not true (= 0), do something else”).

Each switch by itself can represent 2 states: 0 (“false”, represented by voltage off) and 1 (“true”, represented by voltage on). Left column: this corresponds to the k in 2^k. Right column: this corresponds to the number of unique states that this set of binary switches can represent.
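To make the relationship between bits and states concrete, here is a minimal sketch in Python. The function name `num_states` is my own, chosen for illustration; the bit widths are simply the ones mentioned above (Boolean, byte, single and double precision).

```python
def num_states(k: int) -> int:
    """Number of distinct states that k binary switches (bits) can represent."""
    return 2 ** k


# Bit widths mentioned in the text: Boolean, byte, single and double precision.
for k in (1, 8, 32, 64):
    print(f"{k:>2} bits -> {num_states(k):,} states")
```

Every additional bit doubles the number of states, which is why going from 8 to 32 bits takes us from 256 to over 4 billion.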

Note that all computer memory is finite (and used to be expensive), so memory economy is paramount. Do you really need to represent every pixel in an image as a double, or can you get away with an 8-bit integer? How many shades of grey can you distinguish anyway? If the answer is “probably fewer than 256”, then you can save 87.5% of memory by representing the pixel as an 8-bit integer (1 byte) instead of a double (8 bytes). If the answer is that you want to go for maximal contrast, and “black” vs. “white” are the only states you want to represent (no shades of grey), then booleans will do to represent your pixels.
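The 87.5% figure can be checked with a short sketch using Python's standard `array` module. The image size (640 by 480) is a made-up example, and the 1-bit case assumes pixels are packed 8 to a byte, which is one possible storage scheme rather than what any particular image format does.

```python
from array import array

WIDTH, HEIGHT = 640, 480  # hypothetical image size, for illustration only
n_pixels = WIDTH * HEIGHT

# 'd' is a C double (8 bytes); 'B' is an unsigned char (1 byte, values 0..255).
as_double = array("d", [0.0]) * n_pixels
as_uint8 = array("B", [0]) * n_pixels

bytes_double = as_double.itemsize * len(as_double)
bytes_uint8 = as_uint8.itemsize * len(as_uint8)
bytes_boolean = n_pixels // 8  # assumption: 8 black/white pixels packed per byte

saving_uint8 = 1 - bytes_uint8 / bytes_double
saving_boolean = 1 - bytes_boolean / bytes_double

print(f"double:  {bytes_double:>9,} bytes")
print(f"uint8:   {bytes_uint8:>9,} bytes ({saving_uint8:.1%} saved)")
print(f"boolean: {bytes_boolean:>9,} bytes ({saving_boolean:.1%} saved)")
```

The saving does not depend on the image size: it is just 1 - 1/8 for the 8-bit case and 1 - 1/64 for the 1-bit case.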

But computer memory has gotten cheap and is getting ever cheaper, so why is this still an important consideration?

Because I’m starting to suspect that something similar is going on for cognition and cognitive economy in humans and other organisms. Life is complicated and I wonder how that complexity is represented in human memory. How much nuance does long-term memory allow for? Phenomena like the Mandela effect might suggest that the answer is “not much”. Perhaps long-term memory only allows for the most sparse, caricature-like representation of objects (“he was for it” or “he was against it”, “the policy was good” or “the policy was bad”). Maybe this is even a feature that avoids subtle nuance-drift and keeps the representation relatively stable over time, once encoded in long-term memory.

But the issue doesn’t seem to be restricted to long-term memory. On the contrary. There is a certain simplicity that really doesn’t seem suitable to represent the complexity of reality in all of its nuances, not even close, but people seem to be drawn to it. In fact, the dictum “the simpler the better” often seems to have a particular draw. This goes for personality types (I am willing to bet that much of the popularity of the MBTI, in the face of a shocking lack of reliability, can be attributed to the fact that it promises to explain the complexity of human interactions with a mere 16 types – a 4-bit representation), horoscopes (again, it would be nice to be able to predict anything meaningful about human behavior with a mere 12 zodiac signs, or about 3.6 bits, if bits could be fractional), racism (if there are supposedly 4-8 major races, they can be represented with 2-3 bits), and sexism (biological sex used to be conventionally represented with a single bit). There is now even a 2-bit representation of personality that is rapidly gaining popularity – one that is based on the 4 blood types, and that has no validity whatsoever. But this kind of simplicity is hard to beat. In other words, all of these are “low memory plays”. If there is even a modicum of understanding about the world to be gained from such a low-memory representation (perhaps even well within the realm of “purely felt effectiveness” from the perspective of the individual, given the effects of confirmation bias, etc.), it should appeal to people in general, and to those who are memory-limited in particular.
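The bit counts quoted above follow from taking the base-2 logarithm of the number of categories. Here is a small sketch; the function name `bits_needed` and the dictionary of schemes are my own, and the “exact” figure assumes all categories are equally likely, which is a simplification.

```python
import math


def bits_needed(n_categories: int) -> tuple:
    """Return (log2 of the category count, whole bits needed to label them all)."""
    exact = math.log2(n_categories)
    # (n - 1).bit_length() is the smallest k with 2**k >= n, computed without floats.
    whole = (n_categories - 1).bit_length()
    return exact, whole


# Category counts taken from the paragraph above.
schemes = {
    "MBTI types": 16,
    "zodiac signs": 12,
    "blood types": 4,
    "binary sex": 2,
}

for name, n in schemes.items():
    exact, whole = bits_needed(n)
    print(f"{name:<13} {n:>2} categories: {exact:.2f} bits (stored in {whole})")
```

Since actual memory comes in whole bits, the 12 zodiac signs would need 4 bits in practice, the same as the 16 MBTI types, with 4 of the 16 available states left unused.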

Given this account, what remains puzzling – however – is that this kind of almost deliberate lack-of-nuance is even celebrated by those who should know better, i.e. people who are educated and smart enough that they don’t *have to* compromise and represent the world in this way, yet seem to do it anyway. For instance, there are some types of research where preregistration makes a lot of sense, if only to finally close the file drawer. Medication development comes to mind. But there are also some types where it makes less sense and some types where it makes no sense (e.g. creative research on newly emerging topics at the cutting edge of science) – so how appropriate it actually is mostly depends on your research. Surely, it must be possible for sophisticated people to keep a more nuanced position than a purely binary one (“preregistration good, no preregistration bad”) in their heads. This goes for other somewhat sophisticated positions where tribalism rules the roost, e.g. “R good, SPSS bad” (reality: This depends entirely on your skill level) or “Python good, Matlab bad” (reality: Depends on what you want – and can – do) or “p-values bad, Bayes good” (reality: Depends on how much data you have and how good your priors are). And so on…

Part of the reason these dichotomies are so popular even for otherwise sophisticated topics must then lie in the fact that such a low-memory, low-nuance representation has other hidden benefits (after all, it takes 6 bits to represent a mere 49 shades of grey, and 49 shades isn’t really all that much). One is perhaps that it optimally preserves action potential (no course of action is easier to adjudicate than a binary choice – you don’t need to be an octopus to represent these 2 options). Another is that it engenders tribalism and group cohesion (assuming for the sake of argument that this is actually a good thing). A boolean representation has more action potential and is more conducive to tribalism than a complex and nuanced one, so that’s perhaps what most people instinctively stick with…

But – and I think that is often forgotten in all of this – action potential and group cohesion notwithstanding, there are hidden benefits to being able to represent a complex world in sufficient nuance as well. Choosing a data type that is too coarse might end up representing a worldview that is plagued by undersampling and suffers from aliasing. In other words, you might be able to act fast and decisively, but end up doing the wrong thing because you picked from two alternatives that were not without alternative – you fell prey to a false dichotomy. If a lot is at stake, this could matter tremendously.

In other words, even the cognitive utility of booleans and other low memory data types is not clear cut – sometimes they are adequate, and sometimes they are not. Which is another win for nuanced datatypes. Ironically? Because if they are superior, maybe it is a binary choice after all. Or not. Depending on the dimensionality of the space one is evaluating all of this in. And whether it is stationary. And so on.