Western culture – the US in particular – is pervaded by the notion of achievement through hard work. This has many benefits, but like everything, it comes at a steep cost.

One of the things that is typically shortchanged in the relentless drive for achievement is the need for rest and recovery, sleep being a particular instance of this need. If you want to run the world and be part of the elite, you naturally don’t have time or even a need to sleep.

Thus, it should not come as a surprise that sleep is to self-proclaimed high achievers what studying is to high-school and college students – it is essential to long-term success, but no one admits to doing much of it.

Just ask Tiger Woods – he admits to sleeping a mere 4-5 hours per night, even though it is common knowledge that elite athletes have increased sleep needs, on the order of 10 hours or more. Of course, we now all know what he is doing during the rest of the time.

Be that as it may, it is common knowledge that great men have better things to do than to sleep – Da Vinci, Napoleon* and Edison have all claimed sleep durations of well under 5 hours per night. After all, there is plenty of time to rest after one is dead, as the saying goes. This is not an exclusively American trait, either. A lot of cultures that place a similarly great value on achievement have similar sayings. But why?

That is quite simple. The downside to sleeping is obvious – for practical purposes, physical time is a completely inelastic commodity. In other words, time as a resource really does live in a zero-sum universe. Everyone has the same amount of time, and as one becomes more and more accomplished, one’s time becomes more and more valuable. Sleeping might be the single most expensive thing – due to forgone earnings – these people do on any given day. It would make good economic sense to cut back on it. If one is sleeping, one can’t do anything and can’t react to anything. Indeed, the absence of motor output is one of the defining characteristics of sleep.

The upside of sleep is much harder to pin down. Ask any scientist why one needs to sleep and few will give a non-hedged answer that doesn’t amount to some form of “we don’t really know”.

The problem is that with this readily apparent downside and no clear upsides, the rational response is to limit sleep in whichever way possible.

Many people do exactly that – in the past 100 years, the average sleep duration per night in the US has dropped from around 8 hours to under 6.75 hours. This is achieved through the pervasive use of artificial lighting in combination with all available kinds of stimulants, from modafinil to caffeine. People try to further cut corners with gimmicks like polyphasic sleep. The problem with this approach is that it is neither efficient nor sustainable.

Why not? This is best illustrated by a concept called “sleep debt”. Like all debt, it tends to accumulate and even accrue interest. In other words, every time sleep is truncated or delayed with a stimulant like caffeine, a short-term loan is taken out. It will have to be repaid later.

But why? Because we sleep for very good reasons. It is important to realize that most scientists hedge their answers for purely epistemological reasons. And just because they do that doesn’t mean that very good reasons don’t exist. They do.

A strong hint comes from the fact that all animals studied – with no known exceptions – do in fact sleep. What’s more, they typically sleep as much as they can get away with, given the constraints of their lifestyle and habitat. As a general rule, predators sleep longer than prey animals (think cats vs. horses). Animals that are strict vegetarians can’t afford to sleep as long, as they have to forage longer to acquire their food which is less energy dense. But they all do sleep and they all sleep as much as they reasonably can.

And this is in fact quite rational. One significant constraint is posed by the need to minimize energy expenditures. An animal in locomotion expends dramatically more energy per unit weight and time than a sedentary one. This is an odd thought from the perspective of the modern world, with its ample food supplies and readily available refrigerators. But there are very few overweight animals in the wild. In other words, every calorie that is potentially obtained by foraging or predation has to be gained by expending and investing calories in locomotion. This is a precarious balance indeed. All of life depends on it.

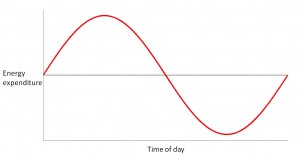

Now if you were to design an organism that has to win in life (aka the struggle for survival and reproduction) would you arrange things such that the “engine” runs constantly at the same level or would you rather build it so that it periodically overclocks itself to unsustainable levels in order to best the competition temporarily then recover by downgrading performance during periods when little competition is expected?

Modulating performance by time of day might be beneficial

The rational and optimal answer to that question depends on outside conditions – can this period of little competition be predicted and expected? As it turns out, it can. Day and night cycles on this planet – dominating the external environment by establishing heat and light levels – have been fairly stable for several hundred million years. Consequently, pretty much all animal life on the planet has adapted to this periodicity. It is only for the past 200 years that man has tried in earnest to wrest control over this state of affairs.

And we did. As warm blooded animals, we no longer strictly depend on heat from the sun to survive, but air conditioning and heating systems are quite nice to keep a fairly stable “temperate” environment. The same thing goes for light. I can have my light on 24/7 if I am able to afford the electricity and if I so choose. Lack of calories is also not a problem whatsoever. On the contrary.

So what is the problem? The problem is that evolution rarely lets a good idea go to waste. Instead, full advantage is taken of it for other purposes (exaptation). The forced downtime that was built into all of our systems by a couple hundred million years of evolution was put to plenty of other good uses. Just because locomotion is prevented doesn’t mean that the organism won’t take this opportunity to run all kinds of “cron jobs” in order to prepare it for more efficient future action. To further elaborate on the metaphor, the brain is not excluded from running a bunch of these scripts. On the contrary.

If you deprive the body of sleep, you are consequently depriving it of an opportunity to run these repair and prepare tasks. You might be able to get away with it once, or for a while, but over time, the system will start to fall apart. Ongoing and thorough system maintenance is not optional if peak performance is to be sustained. Efficiency will necessarily degrade over time if one keeps cutting corners in this department.

Of course, this is a vicious cycle. No one respects sleep (sleeping is for losers, see above), so sleeping is not an option. Instead, one resorts to stimulants to prop up the system and get tasks done. That makes the need for sleep (think maintenance and restoration) even greater, but the ability to sleep even less so, as tasks keep getting done less and less efficiently. This is truly a downward spiral. At the end of all of this, there is a steady state of highly stimulated, unrested and relatively unexceptional achievement. This is the state to be avoided, but probably the one that a high percentage of people find themselves in at any given time.

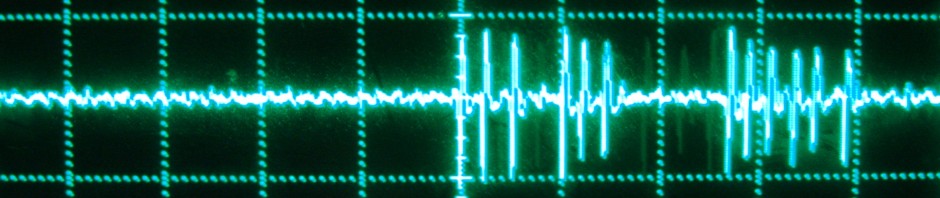

There is ample evidence that the cognitive benefits of sleep are legion. There is also a wide range of metabolic and physiological benefits. Here, I would like to mention some extremely well documented cognitive ones explicitly. Briefly, sleep is essential for learning and memory consolidation, creative problem solving and insight, as well as self-control. This is not surprising. Peak mental performance is costly in terms of energy. Firing an action potential requires quite a bit of ATP (actually mostly for the sodium-potassium pump that restores the status quo ante). It has been argued that these rapid energy needs cannot plausibly be met by circulating glucose alone. Instead, they must be met by glycolysis drawing on stored glycogen, which is also what supplies the energy to the muscles of a sprinter. It is precisely this brain glycogen that is depleted by prolonged wakefulness. Without sleep, the message of this mechanism is clear: No more mental sprints for you. Stimulants can mobilize some reserves for a while, but in the long run, your well of energy will necessarily run dry. This is undone by sleep.

Moreover, it seems that amyloid beta accumulates during wakefulness. This is important because levels of amyloid beta are also increased in Alzheimer’s disease. The jury is still out, but I would not be surprised if a causal link between chronic sleep deprivation and dementia were in fact established. In the meantime, it might be wise to play it safe.

Another important hint at the crucial importance of sleep for neural function comes from the fact that your neurons will get their sleep one way or the other, synchronized or not. Sleep is characterized by synchronized activity of large scale neural populations. Recently, it has been shown that individual neurons can “sleep” on their own under conditions of sleep deprivation. Perhaps this correlates with the subjective feeling of sand in the mental gears when there hasn’t been enough sleep. The significance of this is that sleep deprivation is futile. If the need for sleep becomes pressing, some neurons will get their rest after all, but in a rather uncoordinated fashion and maybe while operating heavy machinery. Not safe. There is a reason why sleep renders one immobile.

Finally, some practical considerations. How to get enough sleep?

Here are some pointers:

- If you must consume caffeine, do so early in the morning. It has a rather long metabolic half-life.

- Try to enforce “sleep hygiene”. No reading or TV in bed. No reading upsetting emails before going to bed. Try to associate the bedroom with sleep and establish a routine. If you can’t sleep, get up.

- If you live in a noisy environment, invest in some premium earplugs. Custom fitted ones are well worth it.

- If at all possible, try to rise and go to bed roughly at the same time.

- Due to the reasons outlined above, a lot of circadian rhythms are coordinated and synchronized with light. Of course, we are doing it all wrong if we ignore this. Light control is crucial. This means several things. Due to the nature of the human phase response curve, bright lights have the ability to shift circadian rhythms in predictable ways. Short wavelength (blue) light is a particular offender, as the intrinsically light-sensitive ganglion cells in the retina are sensitive in this range of the spectrum. In other words, no blue light after sunset. This is particularly true if you suffer from DSPS (delayed sleep phase syndrome – and the suffering is to be taken literally in this case). There are plenty of free and very effective apps which will strip the blue light from your computer screen (which is bright enough to have a serious effect). I recommend f.lux. It is not overdoing it to replace the light bulbs in your house with those that lack a blue component. Conversely, you *want* to expose yourself to bright blue light in the morning. The notion that annoying sounds wake us up is ludicrous. The way to physiologically wake up the brain in the morning is to stimulate it with bright blue light. I use a battery of goLites for this purpose. Looking at them peripherally is sufficient. The photoreceptors in question are in the periphery.

Having breakfast

Warning: If you have any bipolar tendencies whatsoever, please be very careful using bright or blue lights. Even at short exposure durations, these can trigger what is known as a “dysphoric mania” – a state that is closely associated with aggression against yourself or others (and one of the most dangerous states there is). If you try it at all, do not do so without close supervision. Perhaps ironically, those suffering from bipolar tendencies might be among the most tempted to fix their sleep cycle this way, as they are so sensitive to light and “normal” artificial light at night shifts their sleep cycle backwards. For this population, using appropriate glasses (these can even be worn over existing glasses) is a much more suitable – and safer – option. I repeat, there is *nothing* light about light. Nothing.
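To put a rough number on the caffeine pointer above, here is a minimal sketch of half-life arithmetic. It assumes simple exponential decay and a half-life of 5 hours, which is an illustrative round figure and not a measurement; actual values vary considerably between individuals. The function name, the dose and the bedtime are likewise hypothetical.

```python
def caffeine_remaining(dose_mg: float, hours_elapsed: float,
                       half_life_h: float = 5.0) -> float:
    """Caffeine (mg) still in the system after hours_elapsed.

    Assumes simple exponential decay; the 5-hour default half-life
    is an illustrative assumption, not a measured value.
    """
    return dose_mg * 0.5 ** (hours_elapsed / half_life_h)


if __name__ == "__main__":
    dose = 100.0   # hypothetical dose in mg
    bedtime = 23   # hypothetical bedtime, 11 pm on a 24-hour clock
    for label, hour in [("8 am coffee", 8), ("4 pm coffee", 16)]:
        left = caffeine_remaining(dose, bedtime - hour)
        print(f"{label}: about {left:.0f} mg left at bedtime")
```

Under these assumptions, the afternoon cup leaves roughly three times as much caffeine in the system at bedtime as the morning one, which is the reason for the "early in the morning" advice.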

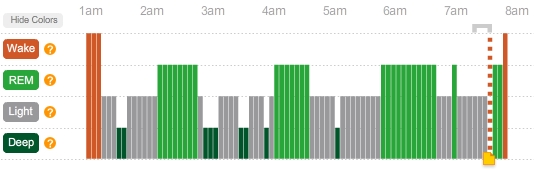

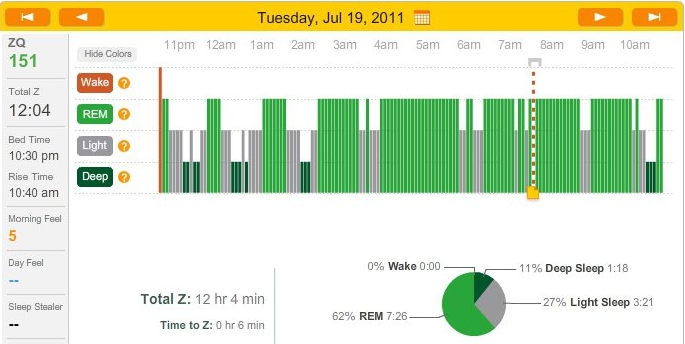

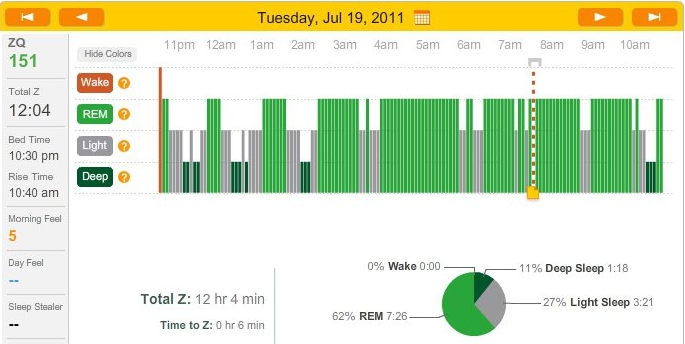

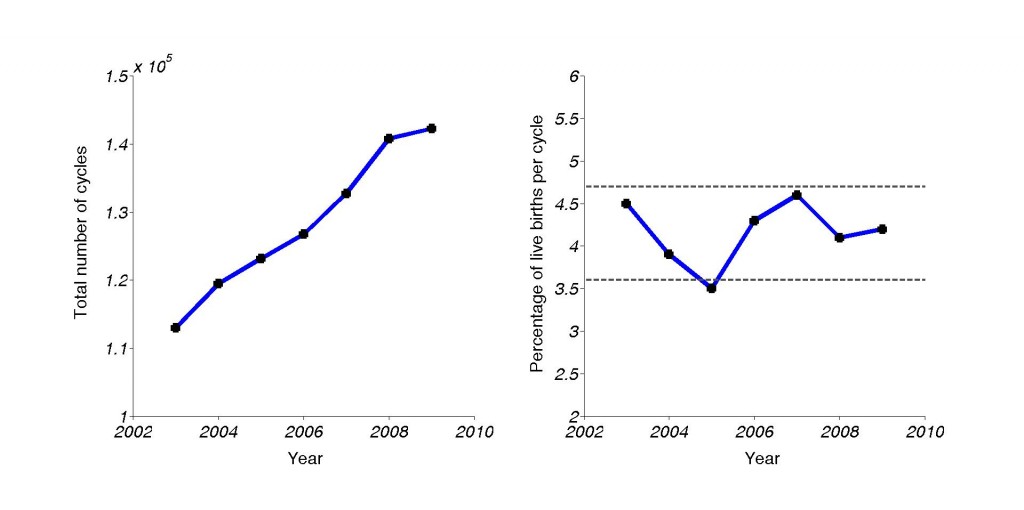

To conclude, how do you know that this effort is worth it? It might take a while to normalize your brain and mind after decades of chronic sleep deprivation. In the meantime, I recommend monitoring your sleep on a daily basis. There are now devices available that have sufficient reliability to do this in a valid way. Personally, I use the ZEO device.

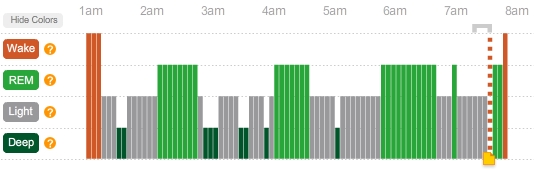

A typical night, as seen by ZEO.

This approach has two advantages. First of all, it makes you mindful of the fact that very important work is in fact done at night. Your brain produces interesting patterns of activity. You are typically biased to dismiss this because you are not awake and aware of it when it happens. This device visualizes it. Second, the downside of disturbed sleep becomes much more apparent. You can readily see what works and what doesn’t (e.g. in terms of the recommendations above). In other words, you can literally learn how to sleep. You probably never did. And once you’ve done so, you can sleep like a winner. And then maybe even go and do great things.

Sleeping like a winner. #beatmyZQ

__________

I recently delivered this content as a talk in the ETS (Essential tools for scientists) series at NYU. The bottom line is the importance of respecting one’s sleep needs, even – and in particular – if one happens to be a scientist. A summary of the talk and slides can be found here.

*There is very little question that Napoleon suffered from bipolar disorder (making – by extension – the rest of Europe suffer from it as well). It is true that he slept 5 hours or less during his manic episodes, but he slept up to 16 hours a day during depressive ones.

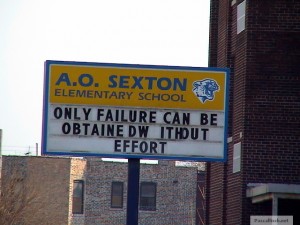

Meditations on the proper handling of pigs

Popular wisdom is not short on advice on how to handle pigs. This goes back to at least biblical times, which counseled that one should not cast one’s pearls before swine.

The point here is that the pigs – being pigs – will not be able to appreciate the pearls for what they are. They mean nothing to them. But one’s own supply of pearls will be rather limited and on top of that, one probably expects something in return for parting with a pearl. In this scenario, no one wins. Not the pigs, and not the pearl caster. So don’t do it.

Actual pigs

Our cultural obsession with pigs does not stop with the bible. On the contrary, pigs are the metaphorical animal of choice for morally and cognitively corrupt characters such as Stalinists, popularized by Orwell’s “Animal Farm”. Of course, none of this is limited to pigs. We like to use animals metaphorically, from black swans (the book is *much* better than the movie) to hedgehogs and foxes.

But back to pigs.

As an old Irish proverb has it: never wrestle with a pig. You both get dirty, and the pig likes it.

Observing most of what passes for public discourse these days, this seems to have been forgotten. It is important to remember this as I do not believe that the rules of engagement for public fights with pigs have changed all that much since time immemorial. I do understand that it drives ratings, but it is not all that helpful. Or at least, one should know what to expect.

A corollary of this – as observed by Heinlein – is that one should never try to teach a pig to sing: it wastes your time and annoys the pig.

To be clear, the idea is that one wastes one’s time because it won’t work, due to inability or unwillingness on behalf of the pig. And it is not restricted to singing, either. Others have pointed out that it is equally pointless – and even harmful – to try to teach pigs how to fly. A lot of this has been summarized and recast into principles by Dale Carnegie.

So how *should* one interact with pigs? As far as I can tell, the sole sensible practical advice – bacon notwithstanding – is that the only way to win is not to play, when it comes to pigs.

How relevant this is depends on how many pigs there are and how easy it is to distinguish them from non-pigs. Sadly, the population of proverbial pigs seems to be ever growing, likely due to the fact that societal success shields them from evolutionary pressures imposed by reality (to make matters worse, a lot of the pigs also seem to have mastered the art of camouflage). In this sense, a society that is successful in most conceivable ways is self-limiting, as it creates undesirable social evolution because the pigs can get away with it, polluting the commons with their obnoxious behavior (although the original commons was a sheep issue). This will – in the long run – need to be countered with a second order cultural evolution in order to stave off the inevitable societal crash that comes from the pigs taking over completely.

This is a task for an organized social movement. In the meantime, how should individuals handle pigs? Probably by recognizing them for what they are (pigs) and recognizing that they probably can’t help being pigs. And not have unreasonable expectations. It could be worse. In another fable, the punchline is that one should not be surprised if a snake bites you because that is what snakes do.

The point is that we now live in a social environment that we are not well evolved for. Does our humanity scale for it? In a social environment with many individuals and diverse positions, verbal battles can be expected to be frequent, but there are no clear victory conditions. It is commonly believed that the pen is mightier than the sword, but in an adversarial exchange (particularly on the internet), arguments are very rarely sufficient to change anyone’s mind, no matter how compelling. So the “swine maneuver” can be used to flag such an exchange, and perhaps allow the parties to disengage gracefully, disarm the tribalist primate self-defense systems that have kicked in and perhaps meaningfully re-engage. Perhaps…

Will an invocation of the “swine maneuver” forestall adverse outcomes? Does the gatekeeper go away if he is called out on his behavior? Does it amount to pouring oil onto the fire (not unlikely, as most pigs can read these days, although it could go either way)? That is an empirical question.

On a final note, teaching pigs to fly hasn’t gotten any easier in the internet age. Preaching to people who already share your beliefs is easy. What is hard is to have a productive discussion with someone who emphatically does not share your fundamental premises and perhaps effect positive change. Mostly, these exchanges just devolve into name-calling. Not useful.

To be clear, we are talking about proverbial pigs here. Actual pigs are much more cognitively and socially adept (not to mention cleaner and less lazy but perhaps less happy) than most cultures – and religions – give them credit for (they are probably maligned to help rationalize eating them).

NOTE: Putin himself (however one feels about his politics) has weighed in on the issue and aptly characterized some exchanges as fruitless:

“I’d rather not deal with such questions, because anyway it’s like shearing a pig – lots of screams but little wool”.

A somewhat similar – but not quite the same – situation seems to be given in the avian world:

“If you want to soar with the eagles, you can’t flock with the turkeys” (as they say)